Scale & Modify AI Processes The Easy Way: Plain Text Crushes Hardcoded

Transform your local AI coding assistant workflows into scalable serverless systems using plain-text instructions instead of rigid pipelines. A practical guide to the serverless agent pattern.

The Problem With Local AI Assistants

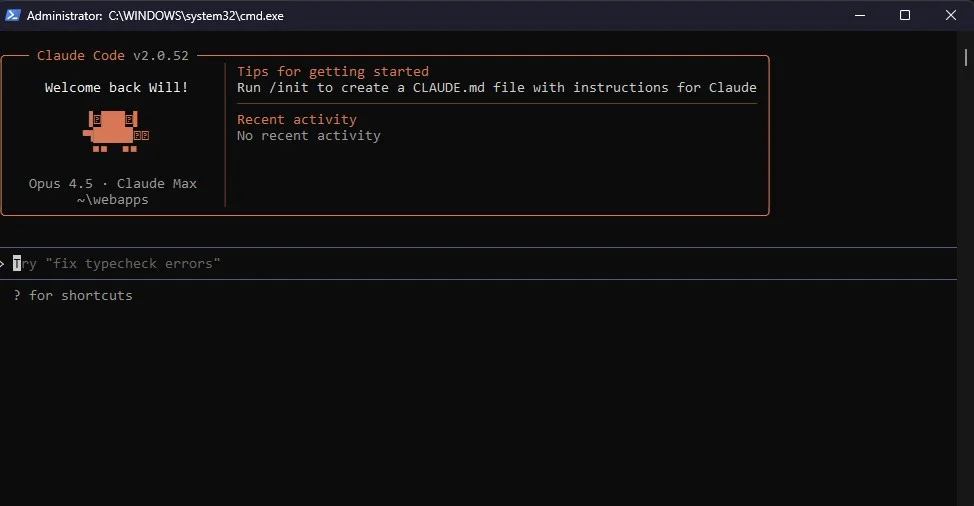

If you've used Claude Code, Cursor, or similar AI coding assistants, you've probably been impressed by their ability to handle complex, multi-step tasks autonomously. They read files, make decisions, run scripts, and adapt their strategy based on intermediate results. I've written about my experience using Claude Code for data analysis - it's genuinely transformative for development workflows.

But here's the fundamental limitation that eventually hits every serious user: these tools run on your local machine.

This means you can only process one job at a time. Your machine must stay on for the duration. You can't integrate with web applications or APIs that need to trigger workflows. There's no horizontal scaling when you need to process hundreds of jobs. And if you want to expose your workflow as an endpoint for users or webhooks? Forget about it.

For personal productivity, none of this matters. But the moment you want to build something real - something users can interact with, something that scales beyond your laptop - local AI assistants become a bottleneck rather than an accelerator.

The Key Insight: It's Not About Execution

Here's what I realized after months of using Claude Code for complex workflow automation: Claude Code's value isn't in its execution environment - it's in its decision-making. The magic isn't that it can run bash commands or read files. The magic is that it can orchestrate complex multi-step workflows, adapt to intermediate results, and make intelligent choices about what to do next.

And that decision-making capability? It's not unique to Claude Code. Any LLM with tool-calling capabilities can do it - Claude's API, OpenAI's function calling, even newer models like Kimi K2. The difference is just the interface and the execution environment.

This realization unlocks something powerful: we can replicate Claude Code's workflow automation in a serverless environment that scales horizontally with demand, triggers from APIs and webhooks, and runs without your laptop being involved at all.

The Serverless Agent Pattern

The pattern is straightforward once you understand it. Instead of Claude Code running locally as the "brain," you use:

- An LLM API (Claude API, OpenAI, Kimi K2) as the decision engine

- A serverless platform (Modal, AWS Lambda) as the execution environment

- Tool definitions that wrap your existing scripts as callable functions

- A context object that holds state instead of the filesystem

The LLM doesn't need to run shell commands or read files directly. It just needs to receive structured data, make decisions, call tools (which are just functions), and repeat until the job is done.

The Real Advantage: No More Hardcoded Pipelines

Here's why this pattern is genuinely transformative for anyone building AI-powered products: you stop hardcoding workflow logic.

Traditional automation tools like n8n or Make.com require you to define every branch, every condition, every decision point explicitly. When your workflow needs to change - and it will - you're back in the node editor, rewiring connections and hoping you didn't break something.

With the serverless agent pattern, your workflow logic lives in a plain-text system prompt. Need to change how the agent prioritizes tasks? Edit the prompt. Want to add a new fallback strategy? Describe it in natural language. The LLM interprets your instructions and makes decisions accordingly.

Key insight: Instead of coding IF-THEN-ELSE logic, you write instructions like "If the extraction confidence is below 80%, flag for manual review. If validation fails, try the alternate parsing strategy." The LLM handles the interpretation and execution.
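To make the contrast concrete, here is a minimal sketch of the same two rules written both ways. The function name `route_hardcoded` and the `ROUTING_RULES` text are hypothetical illustrations, not part of any particular framework. The hardcoded version has to be edited and redeployed whenever a rule changes; the prompt version is just text that gets sent to the LLM.

```python
# Hypothetical comparison: the same two rules as code and as prompt text.

def route_hardcoded(confidence: float, validation_passed: bool) -> str:
    """Hardcoded routing: every branch is written (and changed) by hand."""
    if confidence < 0.80:
        return "manual_review"
    if not validation_passed:
        return "alternate_parser"
    return "accept"


# Prompt version: plain-English rules the LLM interprets at run time.
ROUTING_RULES = """\
- If the extraction confidence is below 80%, flag the document for manual review.
- If validation fails, try the alternate parsing strategy.
- Otherwise, accept the extraction and continue.
"""
```

Adding a third rule to the first version means a code change; adding it to the second means adding a line of text.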

This is a fundamental shift in how we build automation. The decision logic becomes editable by anyone who can write clear instructions - no programming required. Product managers can tweak workflows. Domain experts can encode their knowledge directly. And when requirements change (which they always do), you update a text file instead of refactoring code.

How the Transformation Works

Converting a local workflow to a serverless agent involves four main steps. I'll walk through each with practical guidance based on a document processing pipeline I've implemented.

Step 1: Document Your Decision Logic

Before converting anything, you need to write down ALL the decisions your workflow makes. When does it choose option A vs B? What thresholds does it use? How does it handle failures? What's the priority order?

This documentation becomes your system prompt. For a document processing workflow, this might include:

- Document type detection rules (invoice vs contract vs report)

- Quality thresholds (OCR confidence below 85% triggers manual review)

- Processing priority by document type or urgency

- Extraction validation rules and fallback strategies

- Error handling and retry logic for API failures

A comprehensive system prompt might end up being 200+ lines - but written in plain English that anyone on the team can read and modify.
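The prompt can simply live in a plain text file. If you also keep the numeric rules as a small threshold table, one option is to render the prompt from that table so the numbers are defined in one place. This is a sketch under assumptions: the 85% OCR threshold and the document types come from the list above, while the priority order, retry count, and wording are hypothetical placeholders.

```python
# Hypothetical decision rules for the document-processing example.
DOCUMENT_TYPES = ["invoice", "contract", "report"]
THRESHOLDS = {"ocr_confidence_manual_review": 85}  # percent
PRIORITY_ORDER = ["invoice", "contract", "report"]  # placeholder ordering
MAX_API_RETRIES = 3                                 # placeholder retry budget


def build_system_prompt() -> str:
    """Render the documented decision rules as plain-English instructions."""
    lines = [
        "You are a document-processing agent. Follow these rules exactly.",
        "",
        "Document types: classify each document as one of "
        + ", ".join(DOCUMENT_TYPES) + ".",
        "Quality: if OCR confidence is below "
        f"{THRESHOLDS['ocr_confidence_manual_review']}%, flag it for manual review.",
        "Priority: process documents in this order: "
        + " > ".join(PRIORITY_ORDER) + ".",
        "Validation: if extracted fields fail validation, retry with the "
        "fallback extraction strategy before flagging the document.",
        f"Errors: retry a failed external API call up to {MAX_API_RETRIES} times, "
        "then record the error and continue with the remaining items.",
    ]
    return "\n".join(lines)
```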

Step 2: Convert Scripts to Tools

Each Python script or command your workflow runs becomes a "tool" that the LLM can call. The tool definition specifies what parameters it accepts and what it returns.

For example, instead of Claude Code running:

```shell
python scripts/extract_document.py input.pdf output/extracted.json
```

You define a tool called extract_document that accepts a document URL and returns structured extraction data. The underlying implementation is the same Python code - you're just wrapping it with a function interface instead of a CLI interface.

The key difference: tools return JSON to the context object instead of writing files to disk. Everything stays in memory during the job.
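A minimal sketch of what that wrapping might look like. The tool definition follows the shape the Anthropic Messages API uses for tools (`name`, `description`, `input_schema` as JSON Schema); OpenAI's function calling uses a similar schema under a different wrapper. The `extract_document` body is a hypothetical placeholder standing in for your existing script's logic, and `TOOL_FUNCTIONS` / `execute_tool` are illustrative names.

```python
import json
from typing import Any, Callable, Optional

# Tool definition: tells the LLM what arguments it may pass.
EXTRACT_DOCUMENT_TOOL = {
    "name": "extract_document",
    "description": "Extract structured fields from the document at a URL.",
    "input_schema": {
        "type": "object",
        "properties": {
            "document_url": {"type": "string", "description": "URL of the PDF."},
            "document_type": {"type": "string",
                              "enum": ["invoice", "contract", "report"]},
        },
        "required": ["document_url"],
    },
}


def extract_document(document_url: str,
                     document_type: Optional[str] = None) -> dict[str, Any]:
    """Hypothetical wrapper around the code in scripts/extract_document.py.
    Returns JSON-serializable data instead of writing output/extracted.json."""
    # Placeholder result; call your existing extraction code here.
    return {"document_url": document_url, "document_type": document_type,
            "fields": {}, "confidence": 0.0}


# Registry used to dispatch tool calls by name.
TOOL_FUNCTIONS: dict[str, Callable[..., dict[str, Any]]] = {
    "extract_document": extract_document,
}


def execute_tool(name: str, tool_input: dict[str, Any]) -> str:
    """Run a tool and return its result as a JSON string for the LLM."""
    if name not in TOOL_FUNCTIONS:
        return json.dumps({"error": f"unknown tool: {name}"})
    return json.dumps(TOOL_FUNCTIONS[name](**tool_input))
```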

Step 3: Replace Filesystem with Context

Local workflows constantly read and write files - recognition results, intermediate outputs, temporary data. In serverless, you replace this with a context object that holds state in memory:

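```python
class JobContext:
    """Holds all state for a single job in memory instead of on disk."""

    def __init__(self):
        self.extracted_data = None      # structured output from extraction
        self.validation_results = None  # output from the validation step
        self.processed_items = {}       # per-item results, keyed by item ID
        self.errors = []                # errors collected during the run
```

All state lives in this object during the job. Final results get uploaded to cloud storage (S3, Supabase, or similar) when the job completes. This eliminates the filesystem dependency that ties local workflows to your machine.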
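A dataclass variant of the `JobContext` class makes the "upload at the end" step straightforward, because the whole state serializes to JSON in one call. This is a sketch: the `job_id` field, the `results/<job_id>.json` key layout, and the `upload(key, body)` callable are hypothetical; in practice you would back `upload` with your storage client of choice.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Any, Callable, Optional


@dataclass
class JobContext:
    """In-memory job state that can be serialized in one step."""
    job_id: str
    extracted_data: Optional[dict[str, Any]] = None
    validation_results: Optional[dict[str, Any]] = None
    processed_items: dict[str, Any] = field(default_factory=dict)
    errors: list[str] = field(default_factory=list)

    def to_json(self) -> str:
        """Serialize the final state for upload to cloud storage."""
        return json.dumps(asdict(self), indent=2)


def finish_job(ctx: JobContext, upload: Callable[[str, bytes], None]) -> str:
    """Upload final results once, at the end of the job; returns the key used."""
    key = f"results/{ctx.job_id}.json"
    upload(key, ctx.to_json().encode("utf-8"))
    return key
```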

Step 4: Build the Agent Loop

The agent loop is surprisingly simple. You send the system prompt and job description to the LLM. If it responds with tool calls, you execute them and return results. If it responds without tool calls, the job is done.

This loop handles arbitrarily complex workflows because the complexity lives in the system prompt, not the loop code. A 10-step workflow and a 100-step workflow use the same loop - just different instructions.
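Here is one way to write that loop, as a provider-agnostic sketch. It assumes a `call_llm(system, messages, tools)` function (hypothetical; you would implement it with your provider's SDK) that returns content blocks shaped like the Anthropic Messages API: `text` blocks and `tool_use` blocks with `id`, `name`, and `input`. Tool results go back as `tool_result` blocks, and a `max_turns` cap keeps a confused model from looping forever.

```python
import json
from typing import Any, Callable

# Assumed interface: call_llm(system, messages, tools) -> {"content": [blocks]}
LLMFn = Callable[[str, list, list], dict]


def run_agent(call_llm: LLMFn, system_prompt: str, job_description: str,
              tools: list[dict[str, Any]],
              tool_functions: dict[str, Callable[..., Any]],
              max_turns: int = 50) -> str:
    """Loop until the model answers without tool calls, or give up."""
    messages: list[dict[str, Any]] = [{"role": "user", "content": job_description}]
    for _ in range(max_turns):
        response = call_llm(system_prompt, messages, tools)
        blocks = response["content"]
        messages.append({"role": "assistant", "content": blocks})

        tool_calls = [b for b in blocks if b["type"] == "tool_use"]
        if not tool_calls:
            # No tool calls: the model considers the job finished.
            return "".join(b["text"] for b in blocks if b["type"] == "text")

        results = []
        for call in tool_calls:
            try:
                output = tool_functions[call["name"]](**call["input"])
                content, is_error = json.dumps(output), False
            except Exception as exc:
                # Report the failure to the model so it can pick a fallback.
                content, is_error = json.dumps({"error": str(exc)}), True
            results.append({"type": "tool_result", "tool_use_id": call["id"],
                            "content": content, "is_error": is_error})
        # All tool results go back to the model in a single user turn.
        messages.append({"role": "user", "content": results})

    raise TimeoutError(f"agent did not finish within {max_turns} turns")
```

Note that nothing in the loop knows about documents, invoices, or thresholds; all of that lives in `system_prompt` and the tool functions.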

The Scaling Payoff

Once your workflow runs as a serverless agent, you get automatic scaling without any additional work:

- Concurrent jobs: Each API call runs in its own container, so 1 job or 1,000 jobs use the same code and the platform scales out automatically (up to your account's concurrency limits).

- API/webhook triggers: Your workflow becomes an endpoint. Stripe payment completed? Trigger a job. User uploaded a file? Trigger a job. Scheduled cron? Trigger a job.

- GPU on demand: Platforms like Modal let you spin up GPU instances for specific tool calls, then release them immediately. You pay for seconds of GPU time, not hours of idle capacity.

- Integration with web apps: Your frontend can call the API, show progress via webhooks, and display results - without your laptop being involved at all.

I wrote about building GPU-powered AI tools with Modal previously - the serverless agent pattern takes this further by adding intelligent orchestration on top of the compute infrastructure.
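For the "show progress via webhooks" part, one option is to give the agent a status tool that posts to a webhook your frontend listens on. A minimal sketch using only the standard library; the `report_status` tool, its payload fields, and the webhook URL are hypothetical. The `send` parameter is injectable so the tool can be tested without network access.

```python
import json
import urllib.request
from typing import Any, Callable, Optional


def post_json(url: str, payload: dict[str, Any], timeout: float = 10.0) -> int:
    """POST a JSON payload and return the HTTP status code."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.status


def make_status_tool(job_id: str, webhook_url: str,
                     send: Callable[[str, dict[str, Any]], int] = post_json):
    """Build a hypothetical `report_status` tool bound to one job's webhook."""
    def report_status(stage: str, message: str,
                      percent: Optional[int] = None) -> dict[str, Any]:
        payload = {"job_id": job_id, "stage": stage,
                   "message": message, "percent": percent}
        status_code = send(webhook_url, payload)
        return {"delivered": 200 <= status_code < 300}
    return report_status
```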

Real-World Cost Considerations

Let's be honest about costs. Local Claude Code is "free" (included in your subscription). Serverless agents cost money per job.

For a typical document processing workflow, a job might cost:

- LLM API calls (20-50 tool orchestrations): $0.05-0.30

- External APIs (OCR, validation services): $0.02-0.10

- Modal compute (5 min CPU): $0.01

- Total: ~$0.08-0.41 per document (roughly $0.10-0.50 once rounded up)

At MuseMouth, I help clients evaluate whether these unit economics make sense for their use case. For a consumer product charging $5+ per use, the margins work. For internal tools processing thousands of low-value items, you might need to optimize more aggressively or use cheaper models.

The key consideration: you're trading a flat subscription for per-use costs. For variable workloads, paying per job is often much cheaper than keeping dedicated capacity running. For constant high-volume workloads, dedicated infrastructure might win.
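To sanity-check unit economics like these, add up the ranges and compare against your price. The sketch below uses only the per-document figures from the cost list. The "worst case" margin assumes the high end of every range happens at once, which is a simplification.

```python
# Per-document cost ranges (low, high) in USD, from the cost list.
COSTS = {
    "llm_api_calls": (0.05, 0.30),
    "external_apis": (0.02, 0.10),
    "modal_compute": (0.01, 0.01),
}


def total_cost_range(costs: dict[str, tuple[float, float]]) -> tuple[float, float]:
    """Sum the low ends and the high ends separately."""
    low = sum(lo for lo, _ in costs.values())
    high = sum(hi for _, hi in costs.values())
    return round(low, 2), round(high, 2)


def gross_margin(price: float, cost: float) -> float:
    """Fraction of the price left after the per-job cost."""
    return (price - cost) / price


low, high = total_cost_range(COSTS)
print(f"cost per document: ${low:.2f}-${high:.2f}")             # $0.08-$0.41
print(f"margin at $5/use, worst case: {gross_margin(5.00, high):.0%}")  # 92%
```

Even at the rounded-up $0.50 per document, a $5 price leaves a 90% gross margin; at a price of $0.25 per item, the worst case would already be a loss.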

When This Pattern Makes Sense

The serverless agent pattern isn't always the right choice. Here's when it shines:

Good fit:

- Multi-step workflows with 5+ decision points

- External API calls that benefit from rate limiting and async processing

- GPU-intensive tasks where you don't want to pay for idle capacity

- User-facing features requiring webhooks and real-time status updates

- Variable workloads that need to scale up and down

Not the right tool:

- Simple one-shot tasks without meaningful decisions

- Pure code generation where you're just writing files

- Interactive use cases requiring real-time conversation

- Tasks that genuinely need filesystem browsing and exploration

For most production AI applications I've seen, the pattern fits. The workflows are complex enough that the orchestration overhead pays for itself in reliability and maintainability.

Choosing Your LLM

Different LLMs have different strengths for agentic workflows:

- Claude API (Sonnet/Opus): Strong reasoning, large context window, excellent at following complex instructions. My default choice for sophisticated workflows.

- OpenAI (GPT-4): Reliable tool calling, fast, extensive ecosystem. Good for general-purpose agents.

- Kimi K2: Designed for long agentic runs of 200+ sequential tool calls, and exposes reasoning traces for debugging. Interesting choice for very long workflows.

- Gemini: Cost-effective, long context. Good for budget-conscious applications.

For workflows with many sequential tool calls, I've tested Kimi K2 because it's explicitly designed for agentic patterns. The reasoning traces are genuinely useful for debugging when things go wrong.

Getting Started: The Implementation Checklist

If you want to try this pattern, here's a practical checklist:

Phase 1: Document

- Write down all decision rules in plain English

- Create threshold tables for numeric decisions

- Document fallback and error handling strategies

- List priority orders for competing choices

Phase 2: Convert

- Convert each script to a tool definition with JSON schema

- Create a context class to hold job state

- Implement tool execution functions

- Build the agent loop with tool calling

Phase 3: Harden

- Add timeout handling (don't let jobs run forever)

- Implement webhook notifications for progress/completion

- Add status update tools so the agent can report progress

- Handle rate limits and implement retry logic

- Add graceful degradation for partial failures
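Timeouts and retries can share one helper: retry transient failures with exponential backoff plus jitter, but never start a wait that would run past the job's deadline. A minimal sketch; `RetryableError` is a hypothetical marker you would raise for rate limits or 5xx responses, and the defaults are placeholders. On Modal, the function-level `timeout` setting remains the hard backstop if the agent itself hangs.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class RetryableError(Exception):
    """Hypothetical marker for transient failures (e.g. HTTP 429 or 503)."""


def with_retries(fn: Callable[[], T], max_attempts: int = 4,
                 base_delay: float = 1.0, deadline: Optional[float] = None,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn(), retrying RetryableError with exponential backoff and jitter.

    `deadline` is an absolute time.monotonic() value; if the next wait would
    end past it, the last error is re-raised instead of waiting.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 0
    while True:
        attempt += 1
        try:
            return fn()
        except RetryableError:
            if attempt >= max_attempts:
                raise
            # 1x, 2x, 4x ... base_delay, plus up to base_delay of random jitter.
            delay = base_delay * 2 ** (attempt - 1) + random.uniform(0, base_delay)
            if deadline is not None and time.monotonic() + delay > deadline:
                raise
            sleep(delay)
```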

Phase 4: Deploy

- Set up Modal app with secrets

- Create API endpoint for triggering jobs

- Connect to your frontend

- Monitor logs and track costs

The Bigger Picture

The serverless agent pattern represents something larger than just a deployment strategy. It's a shift in how we think about building AI-powered applications.

Traditional software encodes logic in code. This code requires programmers to modify and is brittle to changing requirements. AI agents encode logic in natural language instructions. This means domain experts can modify behavior directly, workflows can adapt to edge cases through reasoning instead of explicit branches, and the same orchestration code works for completely different use cases.

I'm not suggesting we replace all software with agents - that would be absurd. But for complex, multi-step workflows where the logic genuinely benefits from reasoning and adaptation, this pattern offers something traditional automation can't match: flexibility without fragility.

The teams I see succeeding with this approach share a common trait: they understand that the value isn't in the technology itself, but in how it amplifies human expertise. The system prompt encodes years of domain knowledge. The tools wrap existing, proven functionality. The agent simply orchestrates what humans already know how to do - just faster, more consistently, and at scale.
